ART IN THE ERA OF ARTIFICIAL INTELLIGENCE

MEIAC (Extremadura and Ibero-American Museum of Contemporary Art)

Badajoz, Spain.

February 19th - May 2nd, 2021

At zero hours on January 1, 1970, a new era began: the UNIX clock began to run, on the basis of which practically all our technological devices measure time. Telephones, Internet servers, robots, ATMs, all need to establish a "now" in order to communicate, a simultaneous time of operation, and from there, a past and a future. That convention, thanks to which men and machines began to interact, is UNIX time, a system that counts the seconds elapsed since midnight at the beginning of the year 1970. The decision to store that count in a 32-bit number - 32 digits, each a zero or a one - means that the clock will complete a first full cycle on Tuesday, January 19, 2038, at which time this first 32-bit era will be over.
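The arithmetic behind that date can be checked in a few lines. The following is only a minimal sketch, and it assumes the counter is a signed 32-bit integer (the classic convention, which the text does not spell out), so that the largest value it can hold is 2^31 - 1 seconds:

```python
from datetime import datetime, timedelta, timezone

# Assumption: the count is stored as a signed 32-bit integer,
# so the largest second it can represent is 2**31 - 1.
MAX_INT32 = 2**31 - 1

# Second zero of the count: midnight (UTC) on January 1, 1970.
epoch = datetime(1970, 1, 1, tzinfo=timezone.utc)

# The last second that fits before the counter wraps around.
last_second = epoch + timedelta(seconds=MAX_INT32)

print(epoch.isoformat())        # 1970-01-01T00:00:00+00:00
print(last_second.isoformat())  # 2038-01-19T03:14:07+00:00
print(last_second.weekday())    # 1, i.e. Tuesday (Monday is 0)
```

One second later, a counter of this kind no longer has room for the "now" it is supposed to hold.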

But what is it that characterizes this era, and what makes it different from other historical moments in which a particular technological breakthrough had a decisive influence?

The main difference is that the action of the digital and, principally, its engine, computer code, is not only disruptive in relation to previous technologies, but is undermining the very foundations on which society is organized. The tsunami represented by the digitization process is characterized by its incessant action of deterritorialization and decoding of all the structures it encounters in its path.

From that first message on the ARPANET at the end of 1969 to today's latest tweet, the network has gradually structured itself to become an invisible, omnipresent giant that has penetrated every corner of the planet. The changes it is bringing about, to use computer terminology, have not led to a simple "upgrade" of the system, but are forcing it to radically change its "architecture". We are witnessing how this erosive effect of the code has progressively altered - and will progressively alter - all the structures it has paradoxically come to assist, until finally replacing them with new systems. Banks, factories, public institutions, infrastructures, governments, borders, schools, museums... everything is threatened by its disruptive character, undermining hierarchies, privileges, or old conventions.

It is in this new digital machinic environment where we will have to act, and our role, as humans, is no longer the dominant one. Friedrich Kittler says that "it is we who adapt to the machine. The machine does not adapt to us" 1. Moving the human being from the center of the scene, and focusing directly on the devices, Kittler disagrees with McLuhan and his vision of the media as prostheses of the body since, according to him, technology follows its own evolutionary path. In any case, in relation to the human senses, the German theorist considered that devices do not aim to improve them but seek to replace them, due to their greater efficiency and lower level of error.

What amazed, seduced and at the same time disturbed society 25 years ago was the proliferation and omnipresence of the screen. Both television screens and digital devices were beginning to flood private and public space. In his book Écran Total (Screened Out), Jean Baudrillard referred to the electronic image in terms of its capacity to abolish distances, eliminate opposites, make reality disappear and take its place as a simulacrum, a pure surface. An empty surface on which we project ourselves both as spectators -the television screen- and as operators -the computer screen-. However, far below this spectacular layer and almost invisibly, what was beginning to function and expand even faster than the images multiplying, was the computer code. Far away from the interface and the screen, but very close to the hardware, its structure of ones and zeros emerges from the integrated circuit without generating any sign that could be recognizable to human beings. According to Baudrillard, it will be the code that will be responsible for annulling the real to make way for "hyperreality".

But the current dynamic goes far beyond the domain of the screen. From the total screen, we have moved on to total algorithms. Cell phones, for example, ubiquitous devices, are not limited to disseminating virtual images, but are the mechanisms that connect us to various agencies, which, with their sensors, collect and transmit our data. We are no longer in the presence of a multitude of screens that emit information - or impose a machinic subjection on us - but rather we are part of a network of mechanisms that process our behavior and convert it into merchandise. Immersed in an ecosystem of algorithms that compete for our attention, we are no longer the "target" of propaganda - commercial or political - but have become the product, the commodity.

Within this new dynamic of relations, one of the concepts that is in crisis is the notion of subject (human subject or machine subject), in the face of the dissolution of the very idea of the autonomous, closed, autopoietic body. Perhaps the most appropriate approach would be to analyze this assemblage of disparate elements between humans and machines, from the notion of agencement (assemblage).

Deleuze and Guattari define it as an interrelation of heterogeneous agents that share a territory and have a becoming. The classic example is the agencement formed by the rider, the horse, and the stirrup. This ensemble, which cannot be reduced to the sum of its parts, became a decisive weapon in medieval wars, defining, for example, the outcome of the battle of Poitiers (732), in which Charles Martel's cavalry prevailed over an enemy that, although superior in numbers, did not have this technology, definitively halting the advance of the army of Al-Andalus over Europe.

Another example of a similar agencement emerged very recently, in December 2020, when the U.S. Armed Forces allowed artificial intelligence to act as co-pilot of a military aircraft. For the military itself, this milestone marked the beginning of a new war scenario: that of "algorithmic warfare".

The pilot, identified as Major "Vudu", and ARTUµ 2, a modified version of μZero, a game algorithm that outperforms humans in games like chess and Go, flew a U-2 spy plane on a reconnaissance mission during a simulated missile strike. The AI's main job was to find enemy missile launchers while the human searched for hostile aircraft, both using the plane's radar. This was the first time an AI controlled a military system.

It should be noted that these agencements do not embody a new hybrid subject as the cyborg would be. It is not that our biological machines - eyes or brain, muscle or nerve - are being replaced by mechanical devices, but that there is no longer any way to draw precise boundaries to what was once our domain, our "self." Whether in our daily lives (physical or in social networks), in the production of goods or in any sphere of power, we are all part of different agencies. We contribute our data, our muscles, and our libido, and so will many other humans from different parts of the planet. Machines will also make their contribution, either through the information provided by their sensors, by the "manufacturing" of their robotic arms, and fundamentally, by the algorithms that regulate the whole process and make most of the decisions thanks to the help of their artificial neural networks, operating at microprocessor speed. All these actions, all these inputs, cuts, and flows, operate synchronized under the same measurement system: Unix time, and more and more, the decision-making role is being inexorably occupied by software.

The traditional definition of artificial intelligence (AI) is that it is intelligence carried out by machines. And this would only make sense today if we clarify that we are not referring to technical machines, but to abstract machines. To heterogeneous agencements in which pieces of hardware, artificial neural networks and, mainly, data sources coming from people that, often in real time, feed the system, are assembled. To machines made up of hardware, software, and humans.

As for the hardware, the "electronic brain", which used to occupy huge rooms and require enormous amounts of energy, has today been miniaturized and camouflaged to such an extent that we can no longer distinguish which device has it and which does not. Intelligent objects with distributed reasoning, operating almost autonomously and in a network, remain hidden behind all kinds of functionalities: communication, control, entertainment, production, transportation, financial transactions, security. Household appliances, watches, cars, but also military drones, Bitcoin mining farms or data server warehouses in the cloud, all linked by terrestrial and submarine fiber optic cables and satellites, constitute the "bone part", the material part of the AI machine.

Whereas the software, which until yesterday was only the interface to control the machine, occupies an intermediate place between hardware and humans.

Software is not a technical machine - it is clearly not hardware - but it is not human either - its binary language is incomprehensible to us: it is made of zeros and ones, of cuts and electrical flows. If in the last century artificial intelligence was strongly tied to a specific object - the computer - in the Internet era, algorithms have managed to become independent of their "body" and today they roam the networks - and the clouds - voraciously processing all the information they find in their path. Wherever we look, algorithms constantly manage and organize this mass of information, unmanageable for human beings. Everything is measured and stored: from the data generated by humans themselves - through social networks, e-mail or Google searches - to the traces of their transactions - purchases, transfers, invoicing, phone calls - the traces left by their bodies - through surveillance cameras, fingerprints and DNA profiles - and even those generated by the machines themselves - with their location, altitude, pressure and sound sensors.

Artificial intelligence based on neural networks is not just a new computing tool but represents the beginning of a fundamental change in the way software is written. It is what Andrej Karpathy, the head of AI at Tesla, calls software 2.0.

Software 1.0, which is what we are all familiar with and is written in languages such as Python or C++, consists of instructions to a computer written by a programmer. When writing the code, the programmer links each specific line of the program to some desirable behavior on the machine.

Software 2.0, on the other hand, is written in neural network weights. Training a neural network consists of adjusting each of the weights of the neurons that are part of the network, so that the responses match as closely as possible the data we know. No human is involved in writing this code because there are too many weights - typical networks can have millions - and coding directly in weights is somewhat difficult. Instead, what is done is to specify certain rules according to which the program should behave, calibrate the different weights, and use the available computational resources to search for results that satisfy those constraints, with the network trained by algorithms such as backpropagation and stochastic gradient descent.
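To make the idea of a program "written in weights" more tangible, here is a deliberately tiny sketch. It is not a real neural network: it assumes a single artificial neuron with two weights (`w` and `b`, names chosen for illustration) and a hidden rule, y = 2x + 1, that nobody ever types into the program. The weights are adjusted by stochastic gradient descent, one known example at a time; with a single neuron the gradient can be written by hand, so no backpropagation through layers is needed.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

# The data we know: examples of the desired behavior.
# The rule y = 2x + 1 is never given to the program as an instruction.
data = [(x, 2.0 * x + 1.0) for x in [-2.0, -1.0, 0.0, 1.0, 2.0, 3.0]]

# The whole "program" is two numbers, initially arbitrary.
w, b = 0.0, 0.0
learning_rate = 0.05  # illustrative value, small enough to stay stable here

for step in range(2000):
    x, y = random.choice(data)      # stochastic: one example per step
    prediction = w * x + b
    error = prediction - y
    # Gradient of the squared error 0.5 * error**2 with respect to w and b.
    w -= learning_rate * error * x
    b -= learning_rate * error

print(w, b)  # both values end up very close to 2 and 1
```

The behavior was never programmed line by line; it was found by searching for weights that fit the examples, which is the shift the paragraph above describes.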

According to Karpathy, most of tomorrow's programmers will not be engaged in making complex software developments, but will be engaged in collecting, cleaning, labeling, analyzing, and visualizing the data - produced by humans - that feed neural networks.

Consequently, since the instruction set of a neural network is relatively small, it is significantly easier to implement this software much closer to the silicon, with no intermediate layers, no operating systems. The world will change when low-power intelligence, encapsulated on small, inexpensive chips, becomes pervasive all around us. This implies a move from the paradigm of the universal Turing machine (a single machine that can be used to compute any computable sequence) to ASICs (application-specific, non-programmable integrated circuits, only capable of solving a specific problem). This question is addressed by Friedrich Kittler in "There is no Software" 3. Starting from the Church-Turing thesis, and from the fact that the physical world is continuous - not discrete - and of maximum connectivity, he suggests that the only way to make the universe "computable" would be with "pure hardware" that does not need software.

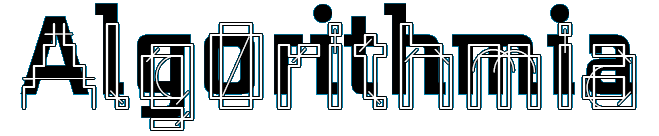

Every Icon, Software art, 1997, John F. Simon

Every Icon shows every possible combination of pixels in a 32 by 32 grid, systematically traversing every conceivable icon. The work reminds us of Borges' Aleph, "that point in space that contains all points," where infinity and eternity converge. A machinic Aleph that becomes an unattainable attempt to prove whether or not reality is computable, whether or not every action, every entity is reducible to code.
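The scale of that traversal can be estimated with a short calculation. This is only a sketch under stated assumptions: it treats each icon as one number counted upward in binary (the bit-to-pixel ordering used by `icon_rows` is a guess, not taken from the work), and the display rate `ICONS_PER_SECOND` is a hypothetical figure chosen purely for illustration.

```python
GRID_SIDE = 32
CELLS = GRID_SIDE * GRID_SIDE   # 1024 pixels, each either black or white

# Every possible black/white combination of the grid.
total_icons = 2 ** CELLS
print(len(str(total_icons)))    # 309 (decimal digits in the total)

def icon_rows(index, side=GRID_SIDE):
    """Return icon number `index` as `side` strings of '0' and '1'.

    Assumed ordering: the icon is the binary expansion of `index`,
    read left to right and top to bottom.
    """
    bits = format(index, "0{}b".format(side * side))
    return [bits[r * side:(r + 1) * side] for r in range(side)]

# Hypothetical display rate, for illustration only.
ICONS_PER_SECOND = 100
SECONDS_PER_YEAR = 365 * 24 * 60 * 60

# Time needed to exhaust the combinations of a single 32-pixel row.
years_for_one_row = 2 ** GRID_SIDE / (ICONS_PER_SECOND * SECONDS_PER_YEAR)
print(round(years_for_one_row, 2))  # 1.36
```

Under these assumptions one row alone takes more than a year, and each additional row multiplies the time by roughly four billion, which is why the complete grid remains out of reach.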

We can already see this trend in cases such as Google, which has revealed that, to process requests for RankBrain, its search algorithm, it uses only TPUs (tensor processing units) instead of ordinary CPU-based computers. TPUs are application-specific integrated circuits and AI accelerators, developed by Google itself exclusively for machine learning.

AI, although it is approaching AGI (Artificial general intelligence) 4, seems to follow a quite different path from that of human reasoning. Both artificial neural networks and quantum computing are based on analyzing a multitude of data, identifying patterns, and giving a probabilistic answer to the question received, in many cases with a precision and speed far greater than that of a living being. However, in no case do they understand what their answer means. They do not understand what they are doing, and they do not carry out logical or mathematical reasoning - as traditional computing or a Turing machine would do. According to Katherine Hayles, algorithmic intelligence should be understood as a form of non-conscious cognition 5, which solves complex problems without using formal languages or deductive inferences. Algorithms learn to recognize patterns without having to go through the whole chain of cause-and-effect analysis - or true or false in relation to reality - and without even having to know the content of what they are processing.

Failure to recognize patterns can lead to innocent mistakes - confusing Chihuahua dogs with chocolate muffins - or to aberrant deviations such as the racist and sexist messages of Tay 6, Microsoft's Twitter bot, or Google's image search engine labeling black-skinned people as gorillas. Ultimately, all the information inoculated into the AI system has human origins - largely from Anglo-Saxon consumers - and therefore carries the same biases and prejudices.

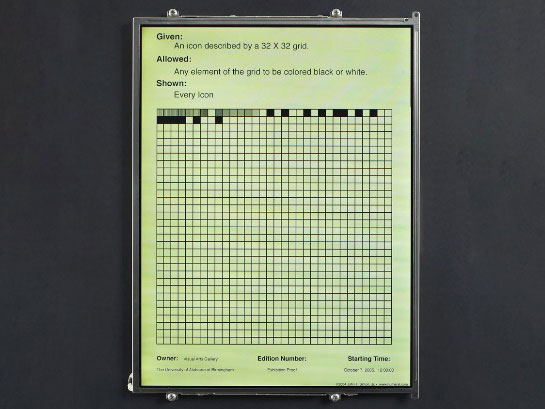

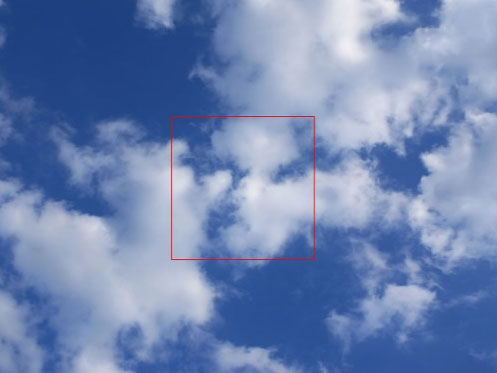

Cloud Face

Photographs, facial recognition algorithm, 2012, Shinseungback Kimyonghun

Humans often tend to lose our gaze in clouds, marble, wet spots or coffee grounds, which prompts our imagination to find all kinds of figures, whether they are faces, animals or objects. In the case of Cloud Face, the error in the communication, the glitch in the system, causes this unexpected effect, this serendipity in the "mind" of the machine. [www]

From another point of view, the ambiguity in pattern recognition was used by several artists, such as Salvador Dalí, who developed his paranoiac-critical method from this effect. In his book The Tragic Myth of Millet's Angelus he states that "...the human brain, is capable, thanks to paranoiac-critical activity, of functioning as a viscous, highly artistic, cybernetic machine."

Machinic cognition is capable of some feats such as accelerating the discovery of vaccines against COVID-19 or the design of new antibiotics, but also of being the tool that allows the emergence of fake news farms, Twitterbots, or automatic pages to hunt clicks and position themselves among the first results in Internet search engines. Using NLP (natural language processing) algorithms such as GPT-2 or the not yet released GPT-3, it is already possible to write artificial texts that are indistinguishable from those created by a person.

Predictive Art Bot

Natural Language Processing, website, installation, Disnovation.org

This work puts into action an algorithm that converts the latest media headlines into artistic concepts, bypassing the human creative process. But beyond mere automation, AI manages to surprise us with plausible but counter-intuitive and even puzzling associations of ideas. [www]

Máquina Cóndor (Condor Machine)

Poetry Machine, 2006 -in process, Demian Schopf

The machine generates mortuary poems that, in the artist's words, "respond to cause and effect relationships where war and economy, bios, polis and logos are conjugated". The poem comprises not only the resulting stanzas, but all the data synchronized in unison, the relational structure of this poetic machine, desiring machine and war machine, which constantly feeds back on the information it receives from the world. [www]

These algorithms use as "food" everything previously written by humans and published on the Internet. This mechanism results in a rather curious paradox or recursion phenomenon. Artificial texts are read mainly by artificial search engine algorithms - making them more visible in search results - and also by the NLP algorithms that created them. So, presumably, the next version of this algorithm, GPT-4, will be "intoxicated" with the texts produced by its previous versions, altering its own results and making them progressively less human. We will then be facing an increasingly hybrid Internet with more and more artificial texts.

Perpetual Self Dis/Infecting Machine

Hand-assembled computer, virus Biennale.py, 2001, Eva and Franco Mattes

The piece, a computer that is indefinitely infected and disinfected by a virus developed by the artists themselves, presents us with a machinic Sisyphus, whose blindness prevents him from seeing the futility of his work, inexorably obeying the commands written in his memory.

5 Katherine Hayles (2014).

6 Tay was an artificial intelligence conversation bot for Twitter created by Microsoft in 2016. Tay caused controversy for delivering offensive messages and was decommissioned after 16 hours of launch.

We are seeing how computer code is advancing and infiltrating all the strata within its reach, whether at the level of large infrastructures or in small everyday operations. It manages the production of goods, pilots drones, operates in the financial markets, but also helps us and converses with us through virtual assistants or embellishes our photos and selfies to improve our positioning in social networks.

But despite the artificial seduction of its artificial "voice", the avant-garde designs in which it wraps itself and its pleasant tactile surfaces that we caress countless times a day, the computer code is not representative. Its action is defined by its condition of a-signifying semiotics 7, thus becoming invisible to our perception. It has a structure of ones and zeros that is shaped by the hardware and emerges from it without generating any sign that can be recognizable to the human being: only cuts and flows of electricity.

Untitledocument

Website, 2005, Ciro Múseres

This work proposes a blind navigation with no horizon in sight. It immerses us in a landscape made up only of signs that mean nothing to us as humans, of commands or machinic codes, articulated in an architecture also defined by the "geographical accidents" of the Internet browser. Pop-up windows, scroll bars, and pull-down menus give shape to a flat, non-representative, a-signifying and untitled universe. A universe that turns our navigation into an aimless journey where the only possible interaction is with the interface itself. [www]

It can be confusing that software is composed of several layers - between the hardware and the end user - with different levels of representation. The graphical interface layer is the one to be understood by the human operator, but underneath, we will find other increasingly cryptic layers, such as the operating system, the BIOS, the kernel, and other interpreters, down to the machine language itself: the pure a-signifying code, composed of sequences of ones and zeros. Every digital file, whether text, images or sounds, is "written" in this binary language.
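A small illustration of that last point, offered only as a sketch: the word "art" is taken as an arbitrary example and UTF-8 is assumed as the text encoding (one of several possible conventions). The same three letters appear first as the layer a human reads and then as the sequence of ones and zeros that is actually stored.

```python
text = "art"                      # the layer meant for the human reader

# Assumption: the text is stored using the UTF-8 encoding.
encoded = text.encode("utf-8")    # the same word as raw bytes

# Each byte written out as eight binary digits.
bits = " ".join(format(byte, "08b") for byte in encoded)
print(bits)                       # 01100001 01110010 01110100

# Reading the bits back through the same convention restores the word.
decoded = bytes(int(chunk, 2) for chunk in bits.split()).decode("utf-8")
print(decoded)                    # art
```

Nothing in the string of digits signals that it is a word rather than a pixel or a sound sample; that depends entirely on the convention used to read it.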

//**Code_UP

Online project, 2004, Giselle Beiguelman

In this project, the artist reminds us that digital images are the product of language. Of a strictly programmed language. Starting from Antonioni's film, Beiguelman experiments with the different parameters that "describe" the digital image and places us in a trap similar to the one set by Antonioni. Like the photographer in Blow Up, we finally find that the documentary evidence does not seem to account for any reality. [www]

For G. Deleuze and F. Guattari, there are two modes of control over society: social subjection and machinic servitude 8. While the former is a mechanism of social subjugation of the individual and acts at the molar level through behavioral coding strategies, machinic servitude or slavery operates at the infrapersonal and infrasocial level, that is, at the molecular level, through non-linguistic messages -such as computer code-.

In the times when these concepts were defined, account was taken, for example, of the impact of television, whose messages made their way not only through language, discourses, and other modes of subjectivation, but also through a-signifying signs that acted as affects or percepts, trying to reach strata beyond the linguistic and the representational.

This conception does not cease to be anthropocentric, since what we have today are agencements in which the human being no longer functions as the receiver of subliminal propaganda or as the target of ideological bombardment, but as one more gear - either as a producer of data or as a consumer - within a heterogeneous machine controlled by the algorithms that regulate it.

So much for self control

Animated GIF (limited edition), 2019, Martina Menegon

The emergence of cyberspace has implied a need to redefine the new role of the subject, its limits, its "physicality" as a virtual entity. How is the space of the self negotiated in a machinic agency? What happens when it does not respond to the function it has been assigned as a gear? The work makes visible what this error in the system generates: the perpetual negotiation, the resistance, the line of flight, the sabotage to self-control. [www]

But beyond this clarification, the two forms of social control remain. On the one hand, subjection, when we sit down to operate a program or interact socially on the Internet under rules that are imposed on us and that we do not really understand, and on the other hand, servitude, when we are hypnotized by a cascade of "instagrammed" images, we caress a sweetened interface that envelops us, or we click here and there, narcotized and almost without resistance. No more resistance than when we accept the cookies, or the "terms and conditions" when we install, excited, a new application that we no longer remember what it was for.

McLuhan warns about this new scenario imposed by technology, leading him to reformulate his famous phrase about the media in these new terms: "the medium is the massage" 9.

Faced with this disruptive scenario, economic flows have also undergone a revolution. In the 2017 ranking, for the first time, the five largest companies in the world were from the digital sector. Oil companies, financial companies, pharmaceutical companies, and electricity companies were relegated below Apple, Google, Microsoft, Amazon, and Facebook.

The Internet . Express

Website, 2017, Jonas Lund

“Browsing often feels like you’re on a high speed express train, surrounded by companies that want to capture your clicks, show you ads and monetize your behaviour, so theinternet.express puts you to the test, can you avoid the big five, racing against you, or will you crash into them and be subjected to even more ads?”, says J. Lund.

The new ecosystem, in which the big five fish move at will, in turn establishes a fractal dynamic towards the smaller ones: a new economy based on "likes", followers and influencers.

[www]

As we have already seen, the new economies are relying more and more on our free and voluntary work - whether in the form of Internet searches, likes on social networks, or recommendations in online stores - transforming us into their product, and exploiting what we could define as the gold of the 21st century.

Despite living in a panopticon of hypervigilance, we are far from those repressive and dictatorial dystopias of science fiction. We are being watched, yes, but those who spy on us - the big digital corporations - are not really interested in what we do with our lives. Unless we break some law - and therefore our data is immediately handed over to the security agencies - we can upload the most absurd material to the network, which will only be decoded and tagged by algorithms that are not interested in its meaning but in its sign-value. It is not so much like a surveillance network as a data-fishing network. To do so, they will fight for our attention by competing to give us the best interface, the most pleasant, the most innovative, the most addictive, in exchange for our contribution to their task of massive interception of "Big Data".

But at the same time as we create surplus value, our clicks generate a productive spiral that determines the future of consumer objects. Our data flow, the information we leave on the network, is used in the design of new products. Data-driven products - products designed from data - have already begun to flood the market. And these are not only technological gadgets but also cultural products, books, movies, or even political speeches.

As we can see, the first step is to profile, classify, assign descriptor labels, even in isolation. But this then makes it possible to generate a whole system of relationships between them, or, in other words, to create a new ontology 10.

The tags, the keywords, constitute by themselves a changing ecology in the network, on the one hand, affected by flows and intensities that make the words go up or down in value, but on the other hand, they shape the network thanks precisely to the value they acquire. Not the use value, perhaps the exchange value, but above all the sign-value 11.

Customers who also bought

Custom Software, Amazon website, Duratran Prints, LED Panels, 2017, Matthew Plummer Fernández

This work consists of a series of portraits generated using software that collects data from other products that customers have purchased on Amazon's website. The images of these grouped items make visible how each type of consumer is algorithmically profiled and classified. But at the same time, it reminds us that our data streams, the information we leave on the web, is used in the design of new products. [www]

Keywords are fundamental to position pages, products, brands (of objects, personalities, or politicians). Their changing value can be measured daily and is what Google Ads charges us if we want to advertise with them. SEO copywriting 12, i.e. texts written only to be read by Google's algorithm in order to be positioned at the top of the search results, is mainly nourished by these words, to which the eventual clicks of users will add more value.

Word Market

Internet Portal, 2012, Belén Gache

Word Market is an Internet portal dedicated to buying and selling words, using a special currency: the Wollar. In times of increasing privatization of public spaces and profusion of copyright laws, Word Market allows users to trade and profit from words and their fluctuating values. The buyer also receives a "Cease and desist" clause to send to those who intend to "illegally" use his words and a certificate of ownership to be able to sue anyone who, in spite of this, insists on using them. [www]

Another SEO strategy is to build a network of cross-links between different web pages, since another of the things that Google's algorithm looks at closely is how well a page is related to the general ecosystem, how high it is placed in this new capitalist pyramid. Pages with higher authority spill their power over the others, improving their prestige, which in turn spill it over to the pages they link to, in a fractal dynamic of measurable intensities that specialists baptized as link juice. All these variables are measurable, and we can consult this information by paying considerable monthly sums to the companies that developed these analysis algorithms.
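The way authority "spills" from page to page can be sketched with a simplified version of the published PageRank idea. This is an illustration only, not Google's actual ranking algorithm: the function name `rank_pages`, the four-page link map, the damping value of 0.85 and the number of iterations are all assumptions made for the example.

```python
def rank_pages(links, damping=0.85, iterations=50):
    """Simplified PageRank by repeated redistribution of scores.

    `links` maps each page to the list of pages it links to.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    score = {p: 1.0 / n for p in pages}          # start with equal authority

    for _ in range(iterations):
        # Every page keeps a small baseline regardless of its links.
        new_score = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its "juice" equally among its outgoing links.
                share = damping * score[page] / len(targets)
                for target in targets:
                    new_score[target] += share
            else:
                # A page with no outgoing links spreads its score evenly.
                share = damping * score[page] / n
                for p in pages:
                    new_score[p] += share
        score = new_score
    return score

# Hypothetical miniature web of four pages.
links = {
    "hub": ["blog", "shop"],
    "blog": ["shop"],
    "shop": ["hub"],
    "orphan": ["shop"],
}
scores = rank_pages(links)
for page in sorted(scores, key=scores.get, reverse=True):
    print(page, round(scores[page], 3))
```

In this toy network "shop" ends up on top because three pages link to it, while "orphan", which nobody links to, keeps only the baseline score: a small-scale picture of the pyramid described above.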

Within this agencement, this collective machine in which we participate, somewhere among the "likes", among the words we search for, among those we follow and those who follow us, the flows of desire are built.

For Deleuze and Guattari, desire is production. Desiring production is nothing more than social production, it produces the real. The desiring machine would occur not in someone, but in the line of encounter between elements of an agency. Hence, desire has no subject and does not tend towards an object 13.

If we have all the necessary information, desire is measurable, analyzable, and in some way, predictable. One of the great differential advantages of Amazon is its fast delivery system. In many countries the delay is one day and, in some cities, a few hours. This is all well and good, but there is room for improvement: the fastest way to deliver a product would be to ship it before the customer buys it. Working on that idea, Amazon has already patented an early delivery system based on predictive analytics of information accumulated about its customers, based on their purchase history and consumption habits.

The use of data at algorithmic speed is not only being exploited in the areas of production or marketing, but also in the financial sphere, where today a large part of the decisions are made by algorithms. Stock market robots buy and sell at breakneck speed and with a heart like ice, with eyes for nothing other than accumulating some profit at the end of the day, regardless of sending companies into bankruptcy or other "collateral damage". This practice, called High-Frequency Trading, in which the human trader only functions as a spectator, already makes up 75% of the trades made daily on the US stock exchanges and almost 50% on the European exchanges.

The decoding and deterritorialization promoted by capitalism, with its dynamic of erosion of all kinds of defensive barriers against globalization, is on the way to being the executioner of its supposed leaders and promoters: capitalism does not need leaders or promoters, since its decoding force tends to disrupt any pre-established hierarchy. Banking, which has driven the digitalization of the economy to favor free trade and the immediacy of transactions, is seeing how electronic money has become the source of its own destruction. The electronic banking system has prepared the conditions for the emergence of another type of virtual money: P2P cryptocurrencies - such as Bitcoin, for example - whose main characteristic is to avoid the regulatory control of banks and states. This has led to the emergence of P2P lending, which, sooner or later, will lead to the disappearance of banks as we know them today.

Total dematerialization has already begun. We see it in the case of several Nordic states that have announced the withdrawal of material banknotes, in smartphone payment in more and more stores or transport and, in general, in the decreasing circulation of cash that the pandemic has promoted.

The Next Coin

Cryptocurrency Generator, 2018, Martin Nadal

The Next Coin explores the cryptocurrency fever and proposes a reflection on the true value of money and how it is generated. After all, isn't all money generated from nothing? If, when the dollar abandoned the gold standard, the economy was plunged into a spiral of virtuality, with the appearance of electronic money and later cryptocurrencies, we have now entered the phase of total dematerialization.

As Baudrillard would point out, we are entering the domain of the virtual economy, now definitively liberated from real economies 14.

Focusing only on the big corporations, on the big "players" in the distribution of wealth on the Internet, may prevent us from seeing other phenomena that also arise from the digital but that open lines of flight toward unforeseen events. Big fish can be threatened if a multitude of small fish organize against them. This is the case of GameStop, a sort of Blockbuster of console video games: a crowd of individual investors who frequented Reddit's WallStreetBets forum decided to unite and move against some hedge fund giants that were betting on the fall of GameStop's shares. Using the same digital tools, but exploiting certain weaknesses in the system, reverse-engineering tactics, and resilience, they managed to make these speculative firms lose billions of dollars and at the same time rescue GameStop from bankruptcy. At least for a few weeks. Nothing lasts forever in the digital domain.

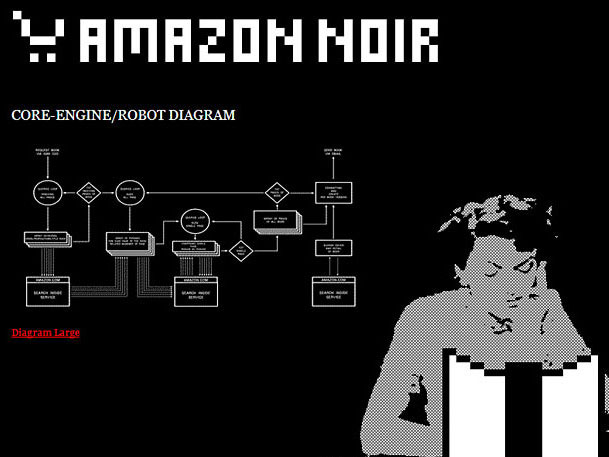

Amazon Noir (Hacking Monopolism Trilogy)

Actions on the Internet, 2006, Ubermorgen, Paolo Cirio and Alessandro Ludovico

This trilogy of algorithm-works exploited the technical and economic vulnerabilities of three major Internet companies, Facebook, Amazon and Google, reconfiguring the way they concentrate, misappropriate and monetize a vast amount of user information and interactions. These interventions aimed to destabilize their economic and marketing models, making visible some of the economic implications of the Internet, such as invasive advertising, user data mining and the commercialization of intellectual property.

11 "Once, out of some obscure need to classify, I proposed a tripartite account of value: a natural stage (use-value), a commodity stage (exchange-value), and a structural stage (sign-value). […] So let me introduce a new particle into the microphysics of simulacra. At the fourth, the fractal (or viral, or radiant) stage of value, there is no point of reference at all, and value radiates in all directions, occupying all interstices, without reference to anything whatsoever, by virtue of pure contiguity". Jean Baudrillard (1993).

12 Search engine optimization (SEO) is the process of improving the quality and quantity of website traffic to a website or a web page from search engines. As an Internet marketing strategy, SEO considers how search engines work, the computer-programmed algorithms that dictate search engine behavior, what people search for, and the actual search terms or keywords typed into search engines by their targeted audience.

The simulacrum precedes reality. In the hyperreality of social networks, it is the code that shapes the real. Baudrillard 15, taking up Borges' story in which the cartographers of the Empire draw a map that ends up covering the territory exactly, says that it is the map, its cartographies, its coordinates that precede, supplant, and engender the territory. Today Google Maps, with its coordinates marked by desire, by likes, by recommendations, by followers and haters, redefines the territory, assigns sign-value to each region, and traces its routes and paths.

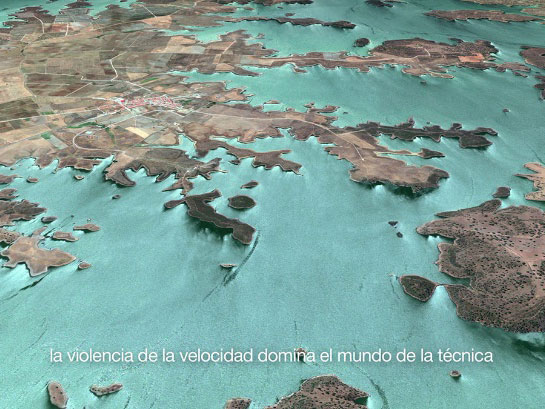

Guadiana

Video, 2017, Antoni Muntadas

A journey along the entire length of the river using images taken from Google Maps. What appears to be an enigmatic visual tour becomes, however, a deep analysis of notions such as deterritorialization and reterritorialization, drift and control, as we move through a landscape traversed by flows, accumulations, appearances and disappearances.

The use of satellite images, and the corresponding shift from horizontal landscape to zenithal landscape, increases this tension between the visual and the analytical, between map and territory, between nature and code.

The products of online retail stores, telemarketers or even home deliverers, but also cultural, academic, or artistic products are exposed to this new dynamic generated by recommendation algorithms. In the same way that Uber's algorithm becomes the boss of thousands of drivers, the structure of "likes" shapes a complex seduction mechanism based on followers, in which, like an enigmatic flock of birds, we never know whether it is the leader who leads the flock of followers, or whether it is the followers' "likes" that modify the leader's route.

This dynamic configures a new pyramid of power, a pyramid of electronic capitalism that we could represent in a way similar to the famous illustration published in the Industrial Worker 16. Except that, at the base of the pyramid, instead of workers or peasants we will have passive followers or "rebloggers", while at the top, instead of monarchs or rulers, we will have the "influencers", the most followed on social networks. While this phenomenon may seem trivial, it should be borne in mind that the same dynamic is fractally reproduced in other spheres. It acts not only on social networks but also organizes and structures all web content into hierarchies. Whether the texts are banal or informative, academic or artistic, only those that make it to the first pages of Google search results will survive. And, in a similar way, the information that feeds artificial intelligence systems will in turn be "valued". It is a sign-value imposed on all our interactions on the network.

Immersed in this dynamic, what initially pushes us is the desire to move up the pyramid, but what appears immediately afterwards is the fear of descending, of being ignored, of receiving more thumbs down than thumbs up. There is also the fear of exclusion through oblivion if we cease to be present. Algorithms penalize lack of activity as much as, or more than, lack of recommendations, so if our activity decreases, we also go down the pyramid.

Trash Facebook

Intervened web page, 2019, Michaël Borras a.k.a. SYSTAIME

This piece makes us reflect on our own participation in networks and their projection into a dystopian future: systems that seem to have their own rules - rules we do not even know - with algorithms that lead us where we do not necessarily want to go - but that we end up accepting as a result of our decisions - and in which our interactions are mere formalities so that, hypnotically, we continue to feed Big Data collection systems.

These penalties for inactivity exist not only on social networks but all over the web. What Google's algorithm promotes are fresh posts - known as freshness in SEO jargon - since it considers that what is new will always be the best. In hyperreality, the high definition of time corresponds to real time 17. It is the point where linear history and the idea of progress inherent to modernity are no longer possible; where events can no longer occur, overridden by the immediacy of real time.

But whether driven by fear or ambition, faced with the demand and the mandate to move up, it will always be possible to resort to buying links or followers. For less than a thousand euros and in a matter of hours, we can obtain about twenty quality links or a hundred thousand followers, in any of the countless SEO positioning websites.

The digital accentuates the immobility that ubiquity allows. Why travel when you can be in several places at the same time? Why expose yourself to the virus if you can telework?

Telepresence marks new behaviors and new social protocols. Facebook, Twitter, Instagram, but also Skype, Zoom, Twitch and even Second Life serve us for all kinds of excursions, confessions, seductions, and exchanges. But unlike the unidirectional experience provided by television and the consequent reception by the viewing public of a uniform discourse - a dynamic that would lead McLuhan to develop his concept of the Global Village - the Internet, with its millions of avatars connected point to point, makes the Global Village explode into thousands of global villages, all similar but different, just as the walls of each Facebook user are different.

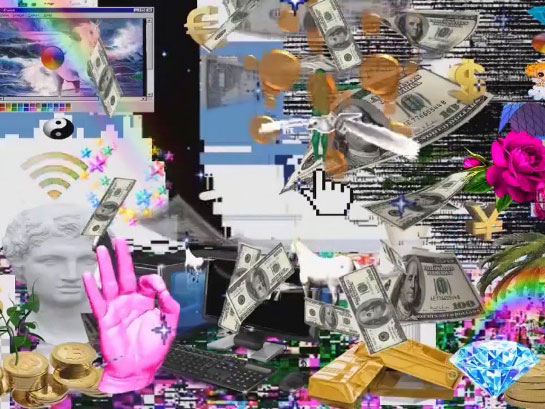

Happy Deep Love

Online video, 2019, Michaël Borras a.k.a. SYSTAIME

The artist remixes web images, uses their graphics and ornamentation, and builds with them an iconic landscape of today's digital pop culture in which, in contrast to what the title of the work announces, all depth is annulled and what we see is strictly confined to the plane of its surface. A hyperreal surface of abundance, of desires, of pleasure, that exists only within the limits of the screen.

Digital networks function as a simulacrum of a mirror in which our virtual selves are seen. Their algorithms show us what we like and only what we like. We see who follows us or who we follow. We are surrounded by advertisements for the products the AI thinks - knows - we want to buy.

We enter the web to relate to the other, to what is outside, to what is different, and yet the only thing we see is what is reflected by our click history, by the information collected as a result of the orders we have obediently followed: SEARCH, BUY, ADD TO BASKET, ADD TO WISH LIST, FOLLOW, STOP FOLLOWING, RECOMMEND, ACCEPT, ACCEPT, ACCEPT, ACCEPT, ACCEPT.

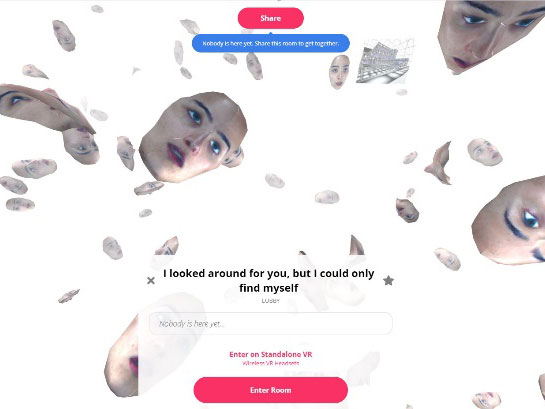

I looked around for you, but I could only find myself

WebVR Installation, 2020, Martina Menegon

This virtual environment, in which users have the option of becoming part of the work using the same "face" as the artist's avatar, presents the digital as a mirror. In the Internet 2.0, algorithms only show us what we "like", what we "follow", what we consume or what we "look for".

The network gives us back a virtual universe molded in the image and likeness not of our self, but of our simulacrum. In virtual interaction, there are no exchanges other than those preceded by the algorithm, by the map, by the code. It is the ecstasy of communication 18 that annuls all possible communication.

IP Address: 81.39.251.110 (Madrid)

Unix Timestamp : 1613322476 (February 2021)

www.gustavoromano.org

18 “The private universe was certainly alienating, insofar as it separated one from others, from the world in which it acted as a protective enclosure, as an imaginary protector. Yet it also contained the symbolic benefit of alienation (the fact that the other exists). […] Obscenity begins when there is no more spectacle, no more stage, no more theatre, no more illusion, when everything becomes immediately transparent, visible, exposed in the raw and inexorable light of information and communication. We no longer partake of the drama of alienation, but are in the ecstasy of communication”. Jean Baudrillard (1988).

Baudrillard, Jean (1981). Simulacra and Simulation. Michigan: University of Michigan Press.

Baudrillard, Jean (1991). La transparencia del mal. Barcelona: Anagrama.

Baudrillard, Jean (1996). El crimen perfecto. Barcelona: Anagrama.

Baudrillard, Jean (1997). El otro por sí mismo. Barcelona: Anagrama.

Baudrillard, Jean (2000). Pantalla total. Barcelona: Anagrama.

Deleuze, Gilles and Guattari, Félix (1985). El Antiedipo, Capitalismo y Esquizofrenia. Barcelona: Paidós.

Deleuze, Gilles and Guattari, Félix (2004). Mil mesetas, Capitalismo y Esquizofrenia. Valencia: Pre-textos.

Guattari, Félix (1996). Caosmosis. Buenos Aires: Manantial.

Guattari, Félix (2004). Plan sobre el planeta. Madrid: Traficantes de Sueños.

Hayles, N. Katherine (2014). Cognition Everywhere: The Rise of the Cognitive Nonconscious and the Costs of Consciousness. New Literary History, 45(2), 199-220.

Karpathy, Andrej (2017). "Software 2.0", Medium. Retrieved April 6, 2018, from https://medium.com/@karpathy/software-2-0-a64152b37c35

Kittler, Friedrich (1999). Gramophone, Film, Typewriter. Stanford, California: Stanford University Press.

Kittler, Friedrich (2009). Optical Media. Cambridge, UK: Polity Press.

Kittler, Friedrich (1995). "There is no software", CTheory. Retrieved June 14, 2016, from www.ctheory.net/articles.aspx?id=74

McLuhan, Marshall and Fiore, Quentin (1967). The medium is the massage. Berkeley: Gingko Press.

McLuhan, Marshall (1996). Comprender los medios de comunicación. Barcelona: Paidós.

NETESCOPIO | MEIAC 2021